Comparing training speed and qualitative results with Watch-It-Move.

Abstract

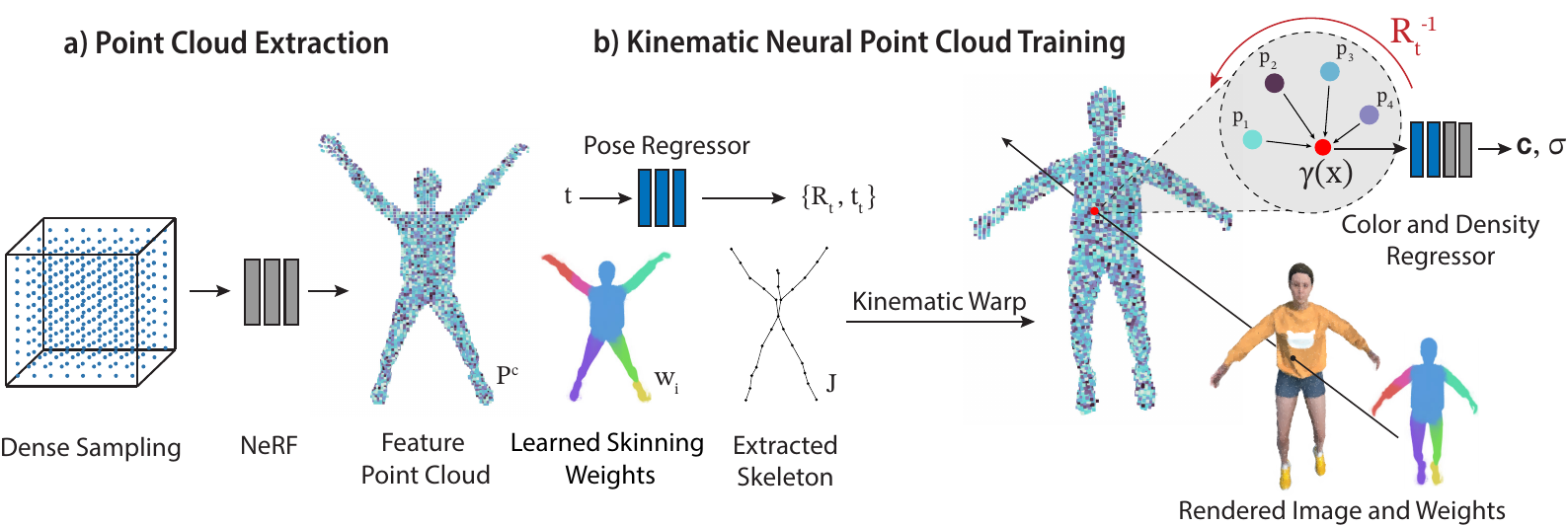

Dynamic Neural Radiance Fields (NeRFs) achieve remarkable visual quality when synthesizing novel views of time-evolving 3D scenes. However, the common reliance on backward deformation fields makes reanimation of the captured object poses challenging. Moreover, state-of-the-art dynamic models are often limited by low visual fidelity, long reconstruction time, or specificity to narrow application domains. In this paper, we present a novel method utilizing a point-based representation and Linear Blend Skinning (LBS) to jointly learn a Dynamic NeRF and an associated skeletal model from even sparse multi-view video. Our forward-warping approach achieves state-of-the-art visual fidelity when synthesizing novel views and poses while significantly reducing the necessary learning time compared to existing work. We demonstrate the versatility of our representation on a variety of articulated objects from common datasets and obtain reposable 3D reconstructions without the need for object-specific skeletal templates.

Results

Reconstruction

Our method faithfully reconstructs the motion of the object, and its neural point representation allows fine details to be reconstructed.

Render of training poses.

Reconstruction & Learned Skinning Weights

Even without post-processing, object parts are correctly segmented according to the motion present in the scene.

Render of training poses.
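
To illustrate how skinning weights relate to both forward warping and part segmentation, here is a minimal NumPy sketch of generic Linear Blend Skinning. It is not the authors' implementation: the function names, the array layout, and the use of an argmax over weights to obtain hard part labels are assumptions made for illustration only.

```python
import numpy as np


def linear_blend_skinning(points, weights, rotations, translations):
    """Forward-warp points with generic Linear Blend Skinning (illustrative).

    points:       (N, 3) canonical point positions
    weights:      (N, B) skinning weights, each row assumed to sum to 1
    rotations:    (B, 3, 3) per-bone rotation matrices
    translations: (B, 3) per-bone translations
    """
    # Transform every point by every bone: result has shape (B, N, 3).
    per_bone = np.einsum("bij,nj->bni", rotations, points)
    per_bone = per_bone + translations[:, None, :]
    # Blend the per-bone results with the skinning weights: shape (N, 3).
    return np.einsum("nb,bni->ni", weights, per_bone)


def hard_part_labels(weights):
    """Assign each point to the bone with the largest weight (assumed rule)."""
    return np.argmax(weights, axis=1)


# Hypothetical toy example: two bones, the second rotated 90 degrees about z.
points = np.array([[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]])
weights = np.array([[1.0, 0.0], [0.3, 0.7]])
rot_z = np.array([[0.0, -1.0, 0.0], [1.0, 0.0, 0.0], [0.0, 0.0, 1.0]])
rotations = np.stack([np.eye(3), rot_z])
translations = np.zeros((2, 3))

warped = linear_blend_skinning(points, weights, rotations, translations)
labels = hard_part_labels(weights)
```

Because the points are warped forward from a canonical pose, changing `rotations` and `translations` is enough to repose them, and the per-point weights give a soft assignment of points to parts.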

Simplified Skinning Weights & Reposing

With a simple post-processing step, we can further merge over-segmented object parts, yielding a better segmentation of the object and a more controllable 3D representation.

Render of novel poses and unseen views.

Results on ZJU

We further show results on real-world data.

BibTeX

@article{uzolas2024template,
  title={Template-free Articulated Neural Point Clouds for Reposable View Synthesis},
  author={Uzolas, Lukas and Eisemann, Elmar and Kellnhofer, Petr},
  journal={Advances in Neural Information Processing Systems},
  volume={36},
  year={2024}
}